SELF CALIBRATION OF CAMERAS FROM DRONE FLIGHTS (PART 3)

BACKGROUND

(Reposted from https://smathermather.com/2019/12/02/self-calibration-of-cameras-from-drone-flights-part-3/)

I have been giving a lot of thought to sustainable ways to handle self-calibration of cameras without adding undue time to flights. For many projects, I have the luxury of spending a little more time to collect more data, but for larger projects this isn’t a sustainable model. In a couple of previous posts (this one and this one), we started to address this question, pulling from the newly updated OpenDroneMap docs to highlight the recommendations there.

As I have been thinking about these recommendations, it has become clear that there are other, more efficient ways to accomplish the same goal. Enter the calibration flight: the idea is that, at some regular cadence, we fly dedicated flights at the same height and flight speed as the larger flight in order to estimate lens distortion.

INITIAL TEST

For this testing, I chose a relatively flat but slightly undulating area in Ohio in the USA: the Oak Openings region, which is a lake-bottom clay lens overlain with sand dunes from glacial lakes. It has enough topography to be interesting, but is flat enough to be sensitive to poor elevation models.

The test area flown is ~80 acres of residences, woodlots, and farmland.

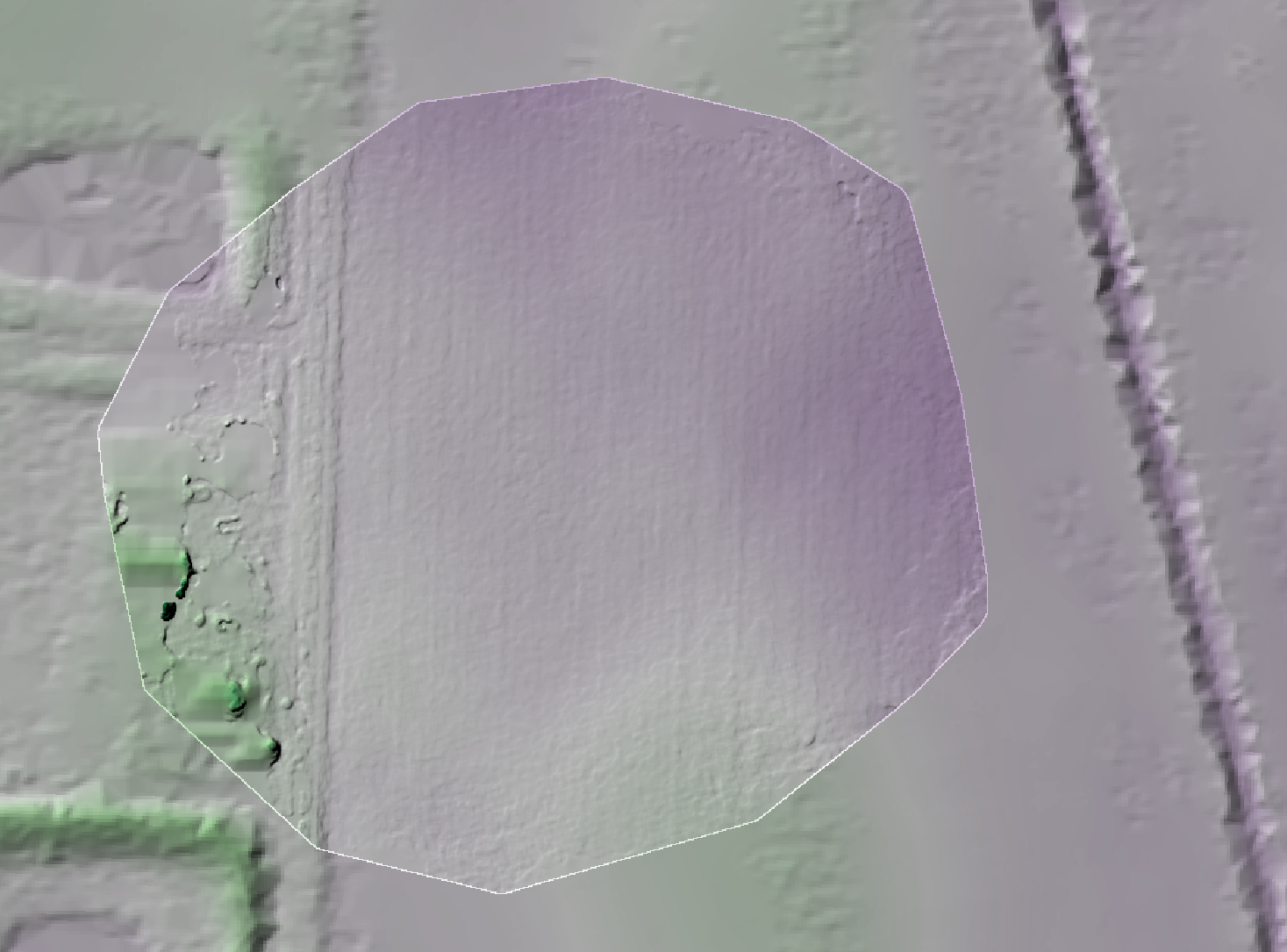

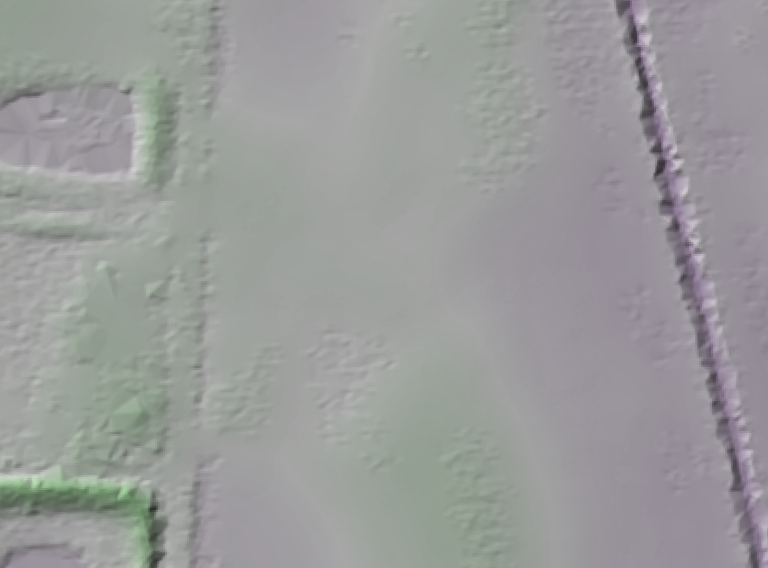

The area was flown with a DJI Mavic Pro, which has an uncalibrated lens with movable focus. The first question I wanted to address is: how much distortion do we get in our resultant elevation models if we just allow for self-calibration? It turns out, we get a lot:

We have seen this in other datasets, but this one forms a good baseline for our subsequent work to remove the distortion.

Next step, we fly a calibration pattern. In this case, I plotted an area large enough to capture two passes of data, plus an orbit around the exterior of the area with the camera angled at 45° for a total of 3 minutes and 20 seconds.

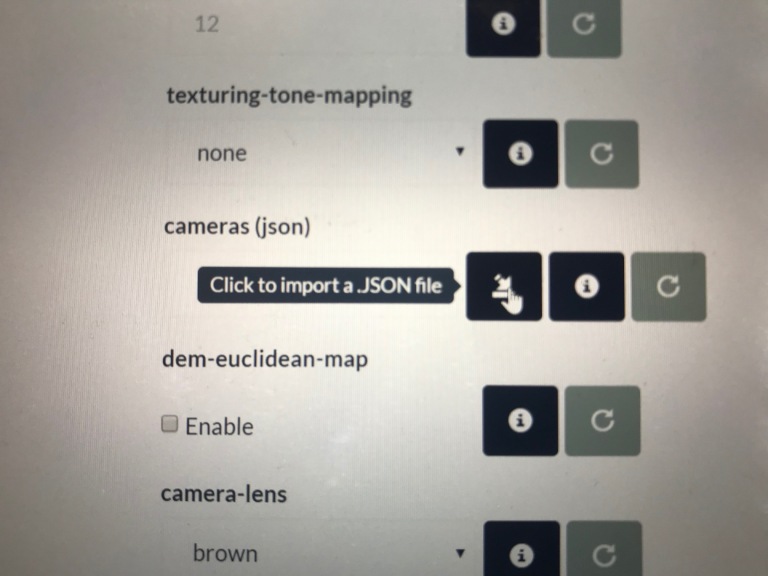

When we process this data in OpenDroneMap, we can extract the cameras.json file (either in the processing directory or we can download from WebODM) and use that in another model. We can do this using the cameras parameter on the command line or in WebODM through uploading the json file from our calibration dataset.
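Before reusing a calibration, it can help to look at what is actually in the file. The following is a minimal sketch, assuming cameras.json is a JSON object keyed by camera ID whose entries carry a `projection_type` plus distortion coefficients such as `k1` and `k2` (the layout OpenSfM uses for camera models). The sample values and the camera ID are placeholders made up for illustration, not results from these flights, and the helper names are hypothetical. The argument list at the end assumes the ODM command-line options `--project-path` and `--cameras`.

```python
import json

# Distortion coefficient names that may appear in a camera model entry.
DISTORTION_KEYS = ("k1", "k2", "k3", "p1", "p2")


def load_camera_models(text):
    """Parse the contents of a cameras.json file into a dict keyed by camera ID."""
    cameras = json.loads(text)
    if not isinstance(cameras, dict) or not cameras:
        raise ValueError("expected a non-empty JSON object of camera models")
    return cameras


def summarize(cameras):
    """Return (camera_id, projection_type, distortion_coefficients) per camera."""
    rows = []
    for camera_id, params in cameras.items():
        projection = params.get("projection_type", "unknown")
        coeffs = {k: params[k] for k in DISTORTION_KEYS if k in params}
        rows.append((camera_id, projection, coeffs))
    return rows


def odm_arguments(project_path, project_name, cameras_path):
    """Build ODM arguments that reuse a calibration (assumed option names)."""
    return ["--project-path", project_path, "--cameras", cameras_path, project_name]


# Placeholder content standing in for a real cameras.json; values are invented.
SAMPLE = """
{
  "hypothetical camera id": {
    "projection_type": "perspective",
    "width": 4000,
    "height": 3000,
    "focal": 0.8,
    "k1": 0.01,
    "k2": -0.02
  }
}
"""

cameras = load_camera_models(SAMPLE)
for camera_id, projection, coeffs in summarize(cameras):
    print(camera_id, projection, coeffs)

print(" ".join(odm_arguments("/datasets", "large_flight",
                             "/datasets/calibration/cameras.json")))
```

In practice you would read the text from the cameras.json produced by the calibration run, and check that the projection type matches the camera model you intend to use for the larger dataset.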

But before we do that, let’s review our calibration data: process it and take a look at what kind of output we get. First, we process it using defaults and evaluate the elevation model to look for artifacts that might indicate that the calibration pattern wasn’t successful.

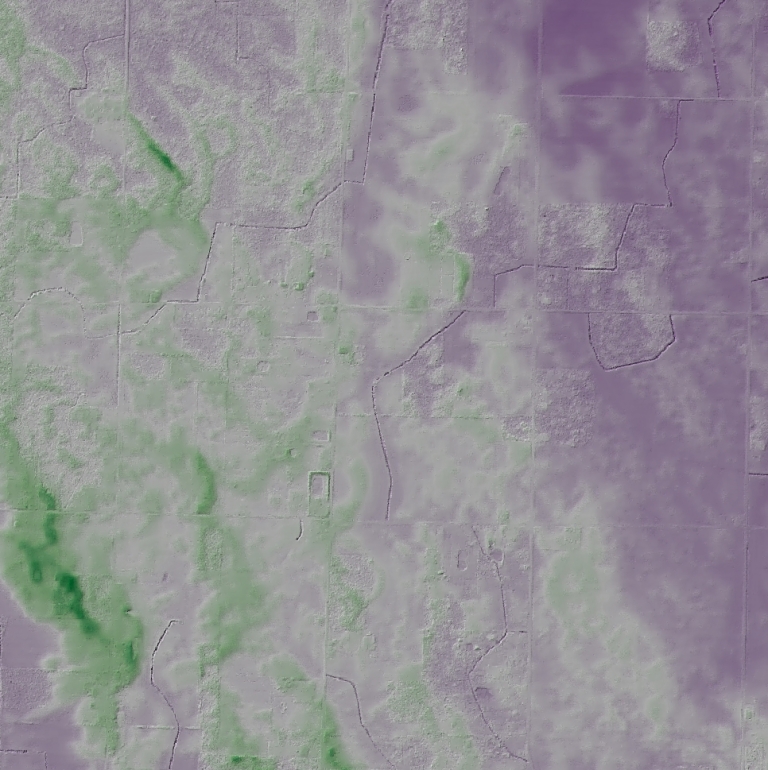

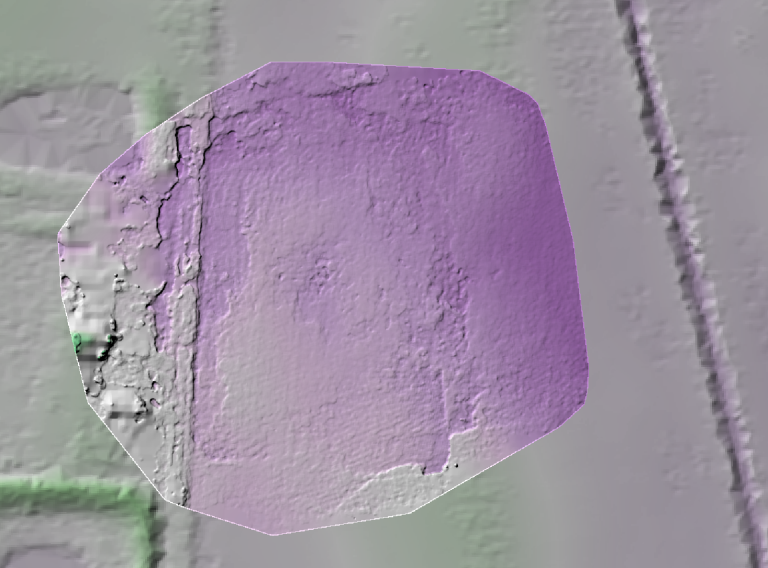

The terrain model from the Ohio Statewide Imagery Program looks like this for our calibration area:

Note that this is mostly a moderately flat farm field with a road and small ditches running North/South in the west of the image and a deep Northeast Ohio Classic ditch in the east.

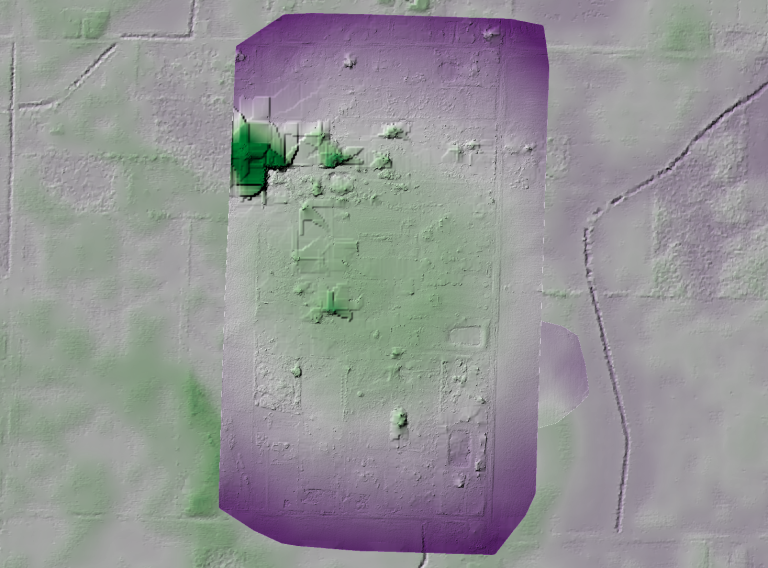

How does our data from our calibration flight look?

It’s not bad. We can see the basic structure of the landscape — from the road in the west to the gentle drop in elevation in the east.

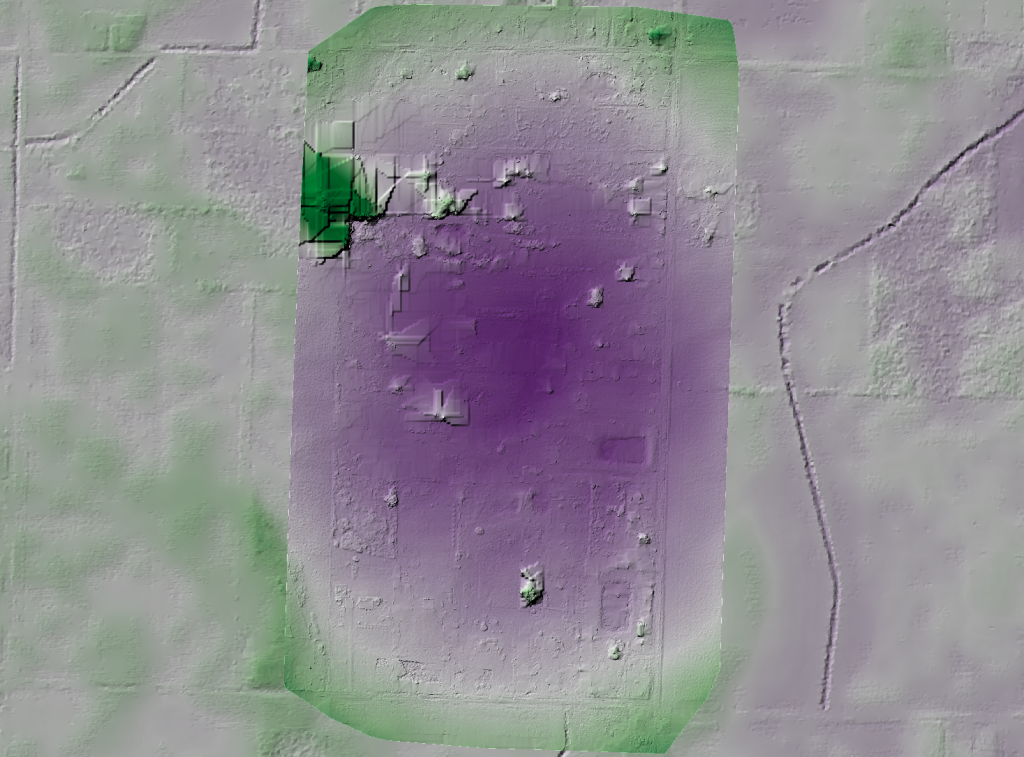

Our default camera model is a perspective camera. How does this look with the Brown–Conrady camera model that Mapillary recently introduced into OpenSfM?

With the Brown–Conrady camera model, we see additional definition of the road bed, ditches alongside the road, and even furrows that have been ploughed into the field. For this small area, it appears the Brown–Conrady camera model is really improving our overall rendering of the digital terrain model, likely as a result of an improved structure from motion product. We even see the small rise in the field at the southern central part of the study area, and as with the default (perspective) model, the slope down toward the ditch on the east of the study area.
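For readers who want to see what separates the two camera models, here is a rough sketch of the distortion each one applies to normalized image coordinates. It assumes the perspective model uses two radial terms (k1, k2) and the Brown–Conrady model adds a third radial term (k3) and two tangential terms (p1, p2), which is the standard textbook form; the function names are mine and the coefficient values in the example are arbitrary, not estimates from this dataset.

```python
def perspective_distort(x, y, k1, k2):
    """Two-term radial distortion on normalized image coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + r2 * (k1 + k2 * r2)
    return x * scale, y * scale


def brown_distort(x, y, k1, k2, k3, p1, p2):
    """Brown-Conrady distortion: three radial terms plus tangential terms."""
    r2 = x * x + y * y
    radial = 1.0 + r2 * (k1 + r2 * (k2 + r2 * k3))
    # Tangential (decentering) terms model a lens that is slightly off-axis.
    dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    dy = 2.0 * p2 * x * y + p1 * (r2 + 2.0 * y * y)
    return x * radial + dx, y * radial + dy


# Arbitrary illustrative coefficients, not values estimated from these flights.
point = (0.3, -0.2)
print(perspective_distort(*point, k1=0.01, k2=-0.02))
print(brown_distort(*point, k1=0.01, k2=-0.02, k3=0.0, p1=0.001, p2=-0.001))
```

With k3, p1, and p2 set to zero the two functions agree, so the Brown–Conrady model can represent everything the perspective model can. The extra parameters give it more freedom to fit fine detail, and also more freedom to fit the wrong thing when they are carried over to a different dataset.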

RESULTS

Having processed the calibration flight with both the perspective and Brown–Conrady cameras, we can apply those camera models as fixed parameters for our larger area and see what kind of results we get.

Our absolute values aren’t correct (which we expect), but the relative shape is — the dataset is now appropriately relatively flat with clear delineation of some of the sand features. This is the goal, and we have achieved it with some of the most challenging data.
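One way to put a number on "the relative shape is right even though the absolute values are not" is to remove the constant vertical offset between the model and a reference surface, then measure what is left. The sketch below is not how the comparison in this post was done (that was a visual assessment); it assumes both surfaces have already been resampled onto the same grid and are given as nested lists of elevations. The function name and the tiny grids are hypothetical.

```python
from math import sqrt
from statistics import median


def relative_shape_error(model, reference):
    """Return (median vertical offset, RMSE after removing that offset)."""
    if len(model) != len(reference) or any(
        len(m_row) != len(r_row) for m_row, r_row in zip(model, reference)
    ):
        raise ValueError("model and reference grids must have the same shape")
    diffs = [
        m - r
        for m_row, r_row in zip(model, reference)
        for m, r in zip(m_row, r_row)
    ]
    offset = median(diffs)
    residuals = [d - offset for d in diffs]
    rmse = sqrt(sum(v * v for v in residuals) / len(residuals))
    return offset, rmse


# Made-up 3x3 grids: a flat reference, a shifted copy, and a "domed" copy.
reference = [[200.0, 200.0, 200.0] for _ in range(3)]
shifted = [[v + 12.5 for v in row] for row in reference]
domed = [row[:] for row in shifted]
domed[1][1] += 1.0  # raise the centre cell to mimic a dome artifact

print(relative_shape_error(shifted, reference))
print(relative_shape_error(domed, reference))
```

A surface that is only offset from the reference leaves a residual near zero, while doming or bowling from a poor lens model shows up as a larger residual regardless of the offset.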

How does our Brown–Conrady calibration model turn out? It did so well on the small scale, will we see similar results over the larger area?

In this case, no: the Brown–Conrady model overcompensates for our distortion parameters. More tests need to be done in order to understand why. For now, I recommend using the perspective model for corrections on large datasets, and the Brown–Conrady camera model on smaller datasets where the details matter but the distortion isn’t discernible.
